
Surrender(d)


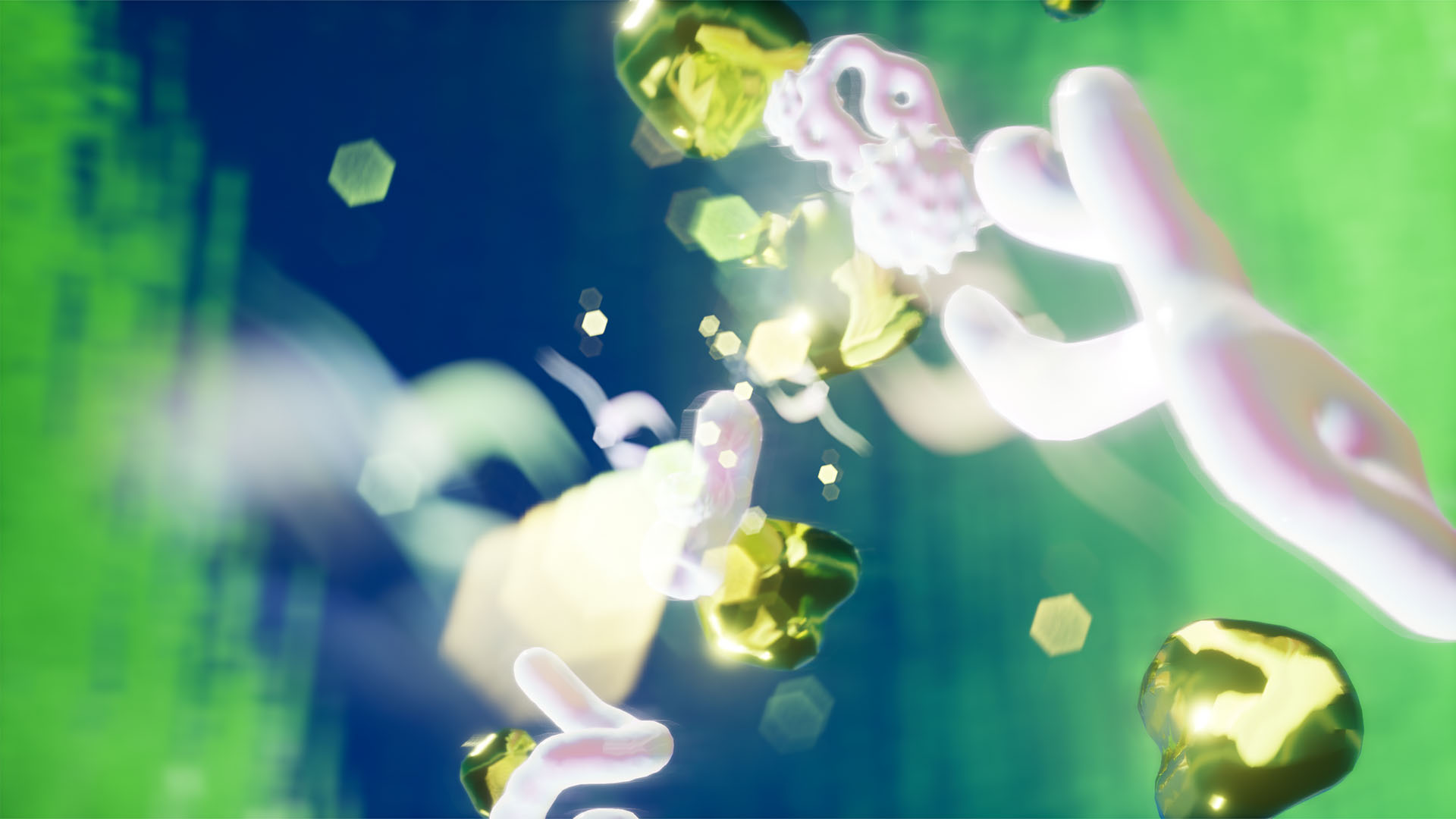

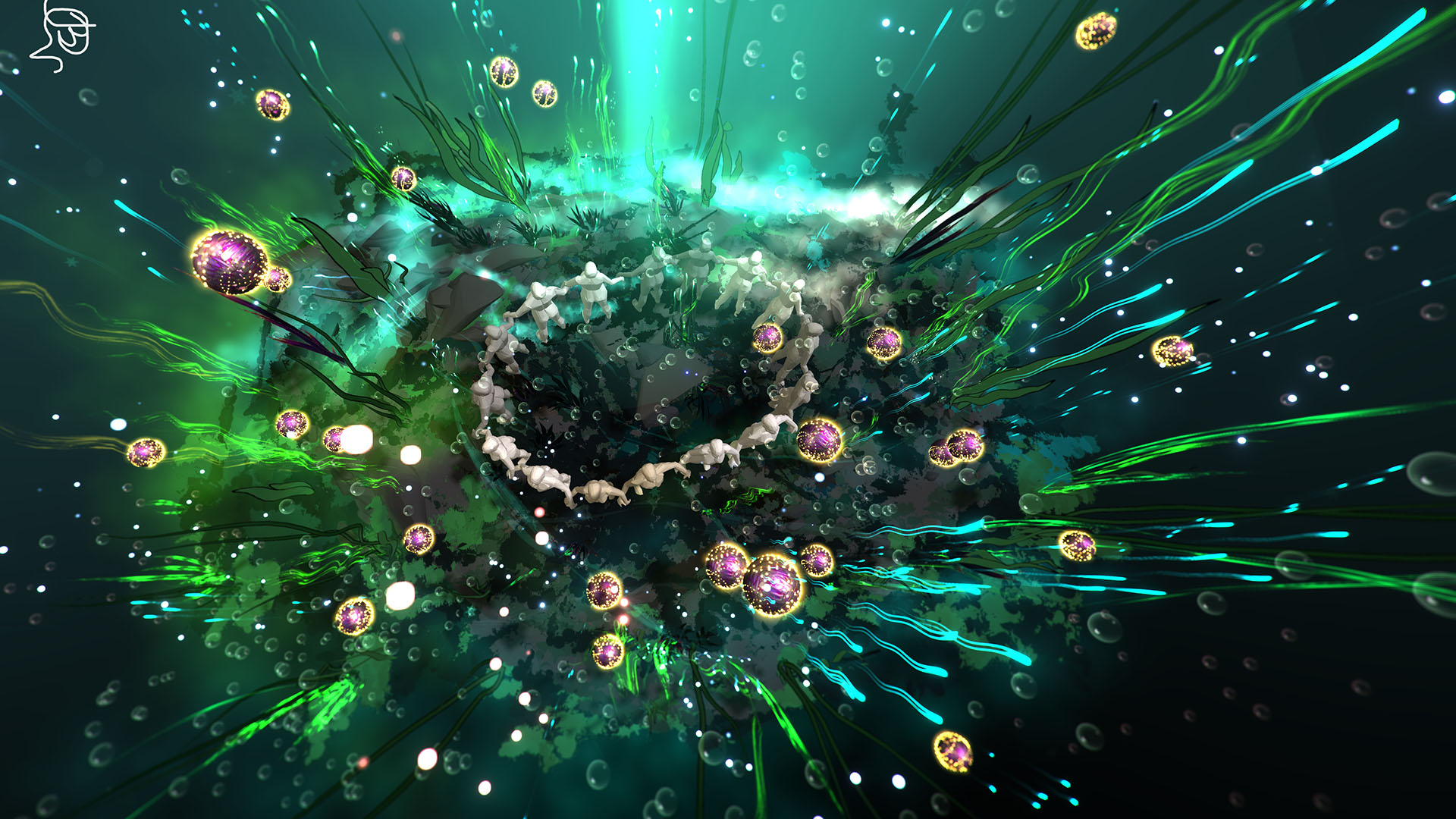

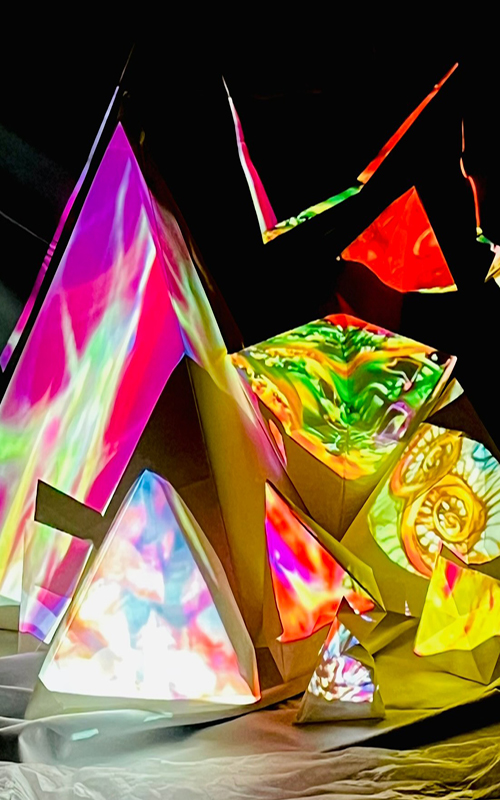

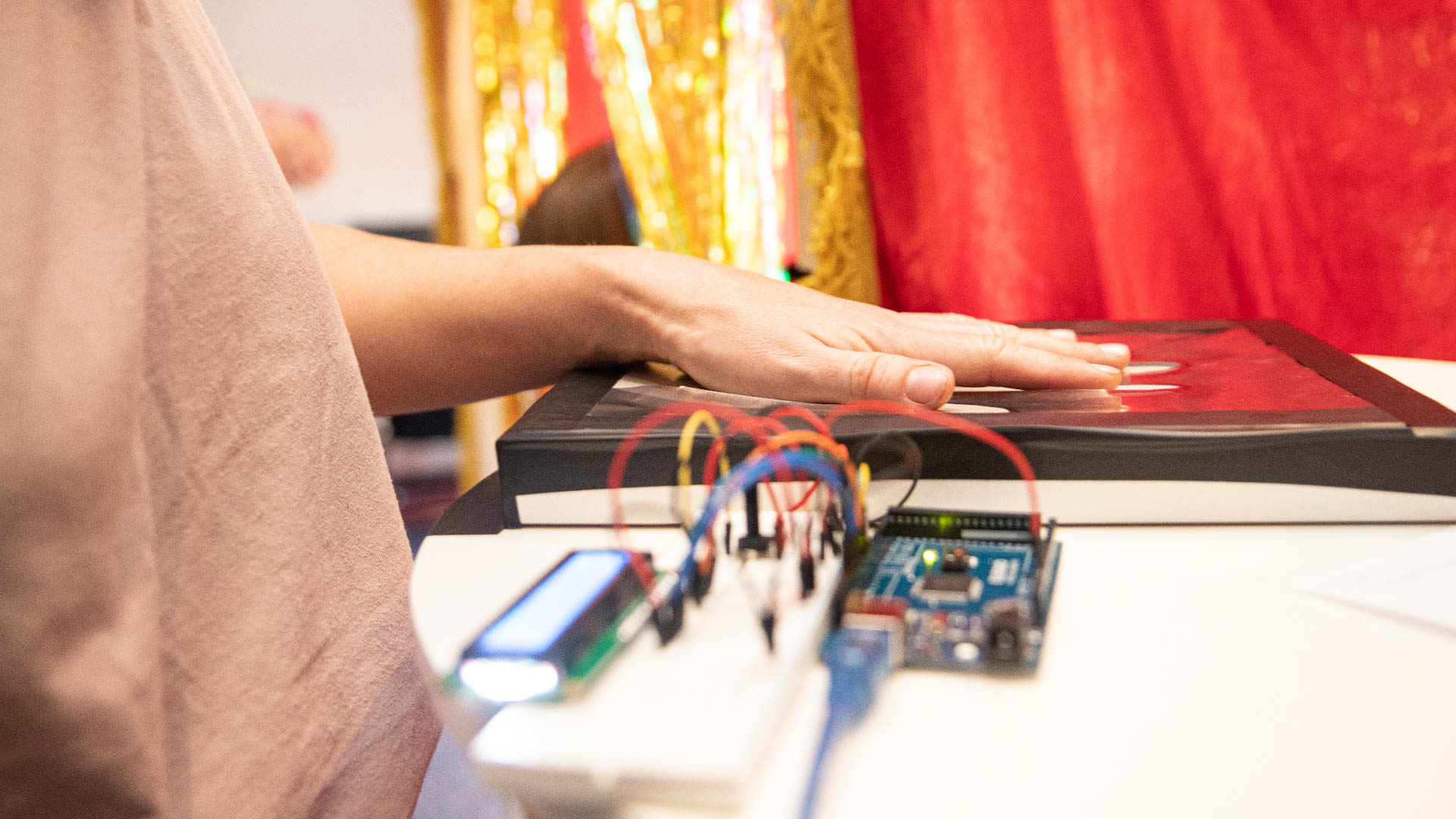

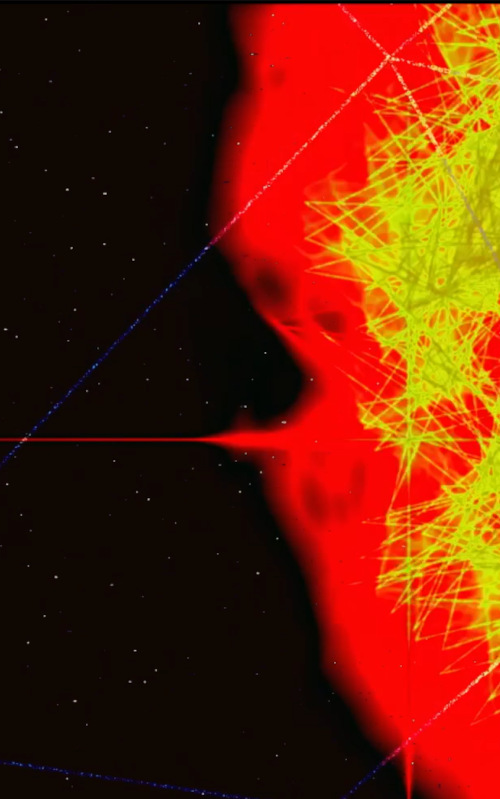

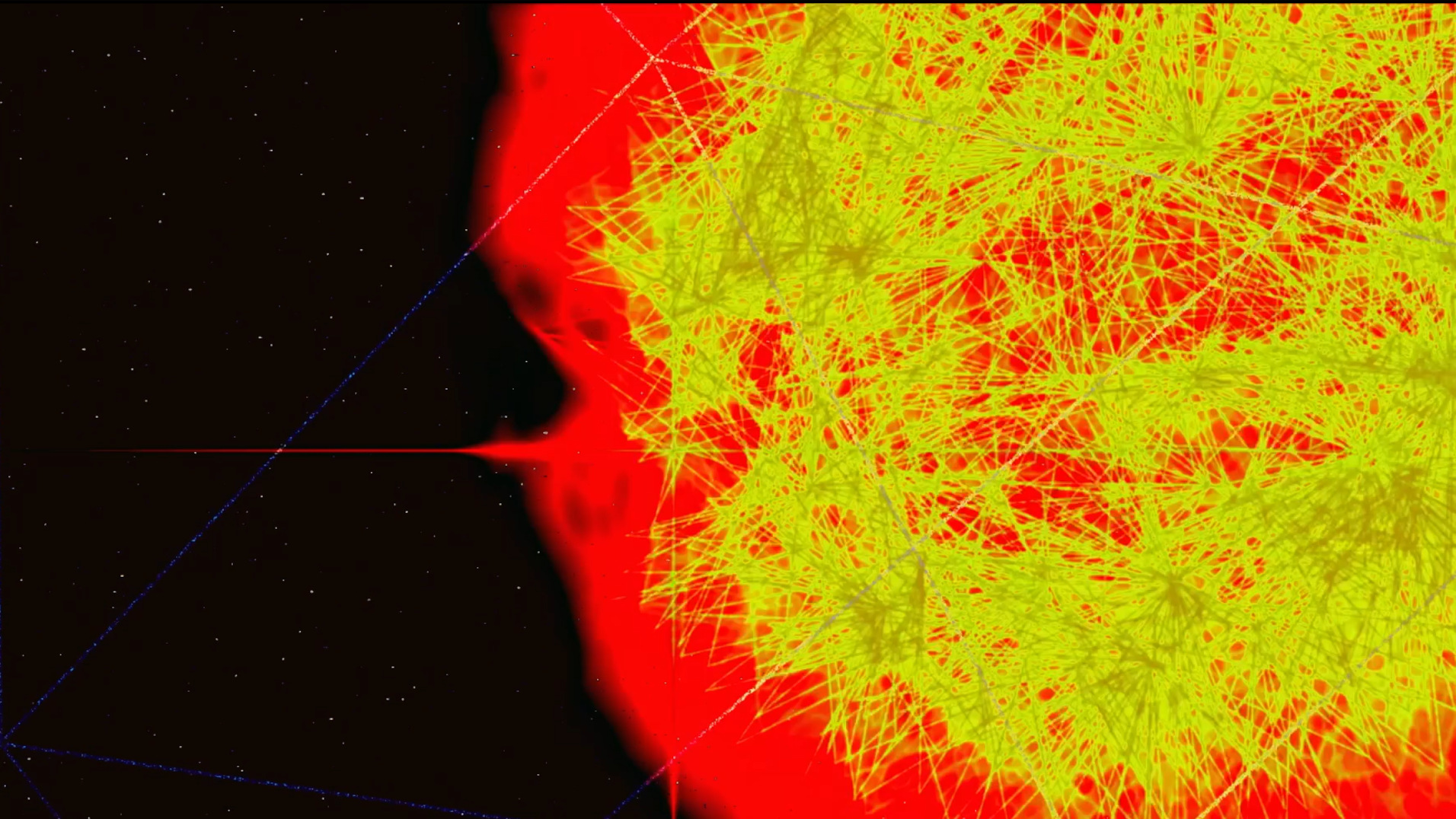

This project began as an exploration of how breath and subtle movement could be translated into real-time generative visuals and sound.

I experimented with building a custom breath sensor, linking it to TouchDesigner, and shaping a particle-based visual world that responds directly to airflow.

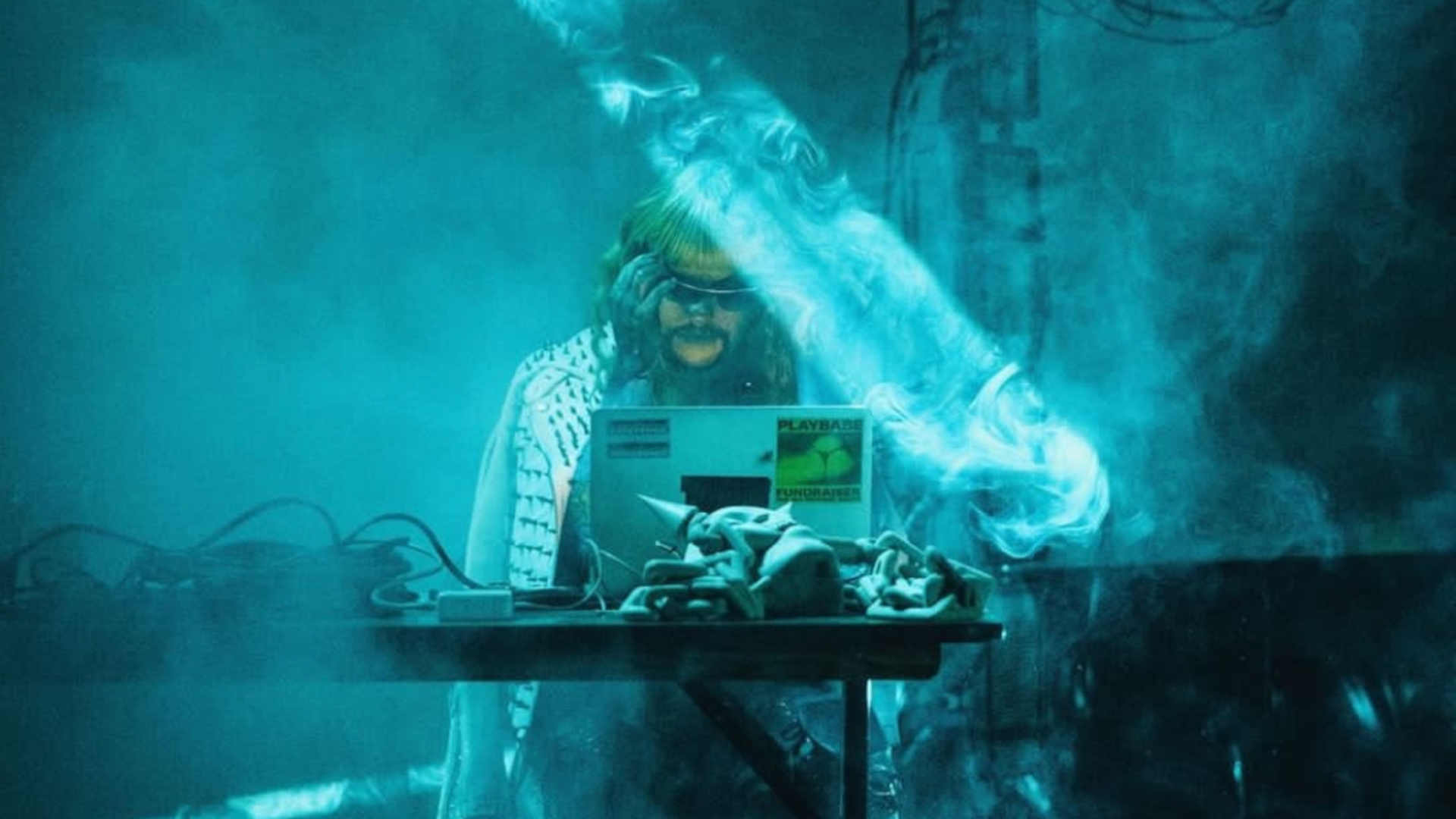

Alongside this, I used Ableton Live to create a shifting sonic environment whose depth changes as the virtual camera moves. The aim was an interactive installation that feels simple and meditative, even though it relies on advanced technology.


£5,000

Aims of the Project

Surrender(d) explores how sound, movement, and visuals come together in an immersive, interactive space.

The project aims to test how breath-based input can drive real-time generative visuals and sound. I set out to build and refine a custom sensor, integrate its data into TouchDesigner, and design a responsive particle system shaped by airflow intensity.

How did they do that?

To build Surrender(d), I created a custom breath sensor that converts airflow into digital data. The hardest part was getting stable readings.
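One common way to steady a jittery sensor signal is an exponential moving average. The sketch below is an illustration only, not the project's actual code: the function name `smooth_readings`, the `alpha` parameter, and the sample values are all assumptions.

```python
def smooth_readings(samples, alpha=0.2):
    """Smooth raw sensor samples with an exponential moving average.

    `alpha` (hypothetical default 0.2) controls responsiveness:
    values near 1 follow the raw signal, values near 0 smooth heavily.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    current = None
    for sample in samples:
        if current is None:
            # Start from the first reading so the output does not ramp up from zero.
            current = float(sample)
        else:
            # Blend the new reading with the running average.
            current = alpha * sample + (1.0 - alpha) * current
        smoothed.append(current)
    return smoothed


# Made-up readings: a single spike is damped rather than passed straight through.
readings = [0, 100, 0]
print(smooth_readings(readings))
```

A lower `alpha` gives calmer visuals at the cost of a slower response to each breath, so the value would need tuning by feel.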

In TouchDesigner, I generated particles arranged in a sphere and added a subtle force so the particles keep drifting even when no one interacts, giving the piece a sense of quiet life.
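The idea of a sphere of particles with a gentle idle drift can be sketched in plain Python. This is not the TouchDesigner network used in the piece: the Fibonacci-lattice placement, the random nudge standing in for the "subtle force", and the names `sphere_points` and `drift` are assumptions made for illustration.

```python
import math
import random


def sphere_points(count, radius=1.0):
    """Spread `count` points roughly evenly over a sphere (Fibonacci lattice)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for i in range(count):
        # y steps from just below +1 to just above -1.
        y = 1.0 - 2.0 * (i + 0.5) / count
        # Radius of the horizontal ring at this height.
        ring = math.sqrt(max(0.0, 1.0 - y * y))
        theta = golden_angle * i
        points.append((radius * ring * math.cos(theta),
                       radius * y,
                       radius * ring * math.sin(theta)))
    return points


def drift(points, strength=0.01, rng=random):
    """Nudge every point by a small random offset (a stand-in for an idle force)."""
    return [tuple(c + rng.uniform(-strength, strength) for c in p)
            for p in points]


particles = sphere_points(500)
particles = drift(particles)  # call once per frame to keep the cloud moving
```

Calling `drift` every frame makes the particles wander slowly; a real system would also need something pulling them back toward the sphere so the shape does not dissolve over time.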

The sound, made in Ableton Live, responds to the breath intensity and the camera’s position.
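A mapping of this kind can be pictured as two inputs rescaled into two sound controls. The sketch below is hypothetical: the control names `volume` and `reverb_mix`, the input ranges, and the output ranges are assumptions, and it does not show how the values actually reach Ableton Live.

```python
def remap(value, in_min, in_max, out_min, out_max):
    """Linearly rescale `value` from one range to another, clamping at the ends."""
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    t = (value - in_min) / (in_max - in_min)
    t = min(1.0, max(0.0, t))
    return out_min + t * (out_max - out_min)


def sound_controls(breath, camera_distance, max_distance=10.0):
    """Turn breath intensity (0..1) and camera distance into two control values.

    All ranges here are illustrative assumptions, not the installation's settings.
    """
    return {
        # Stronger breath -> louder, with a floor so the piece is never silent.
        "volume": remap(breath, 0.0, 1.0, 0.2, 1.0),
        # Camera farther away -> more reverb, suggesting greater depth.
        "reverb_mix": remap(camera_distance, 0.0, max_distance, 0.0, 1.0),
    }


print(sound_controls(breath=0.5, camera_distance=2.5))
```

Clamping keeps an unexpectedly strong breath or a distant camera from pushing the sound outside its intended range.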

Not everything worked: sensors broke, and some ideas were too complex. Simplifying and focusing on the core idea, breath and presence, helped me move forward.

Video Credits:

- Video editing – Teles

- Assistance – Dora Zo

"The programme enabled me to explore new tools, test my ideas, and push Surrender(d) far beyond what I could have done alone."